17 Definitions of the Technological Singularity

The term singularity has many meanings.

In everyday English, singularity is a noun that designates the quality of being one of a kind, strange, unique, remarkable or unusual.

If we want to be even more specific, we might take the Wiktionary definition of the term, which seems more contemporary and easier to comprehend than those in classic dictionaries such as Merriam-Webster.

Wiktionary lists the following five meanings:

Noun

singularity (plural singularities)

1. the state of being singular, distinct, peculiar, uncommon or unusual

2. a point where all parallel lines meet

3. a point where a measured variable reaches unmeasurable or infinite value

4. (mathematics) the value or range of values of a function for which a derivative does not exist

5. (physics) a point or region in spacetime in which gravitational forces cause matter to have an infinite density; associated with Black Holes

What we are most interested in, however, is the definition of singularity as a technological phenomenon, i.e. the technological singularity. Here we can find an even greater variety of subtly different interpretations and meanings. It may therefore help to have a list of what are arguably the most relevant ones, arranged in rough chronological order.

Seventeen Definitions of the Technological Singularity:

1. R. Thornton, editor of the Primitive Expounder

In 1847, R. Thornton wrote about the recent invention of a four-function mechanical calculator:

“…such machines, by which the scholar may, by turning a crank, grind out the solution of a problem without the fatigue of mental application, would by its introduction into schools, do incalculable injury. But who knows that such machines when brought to greater perfection, may not think of a plan to remedy all their own defects and then grind out ideas beyond the ken of mortal mind!”

2. Samuel Butler

It was during the relatively low-tech mid-19th century that Samuel Butler wrote Darwin among the Machines. In it, Butler combined his observations of the rapid technological progress of the Industrial Revolution with Charles Darwin’s theory of evolution. That synthesis led Butler to conclude that the technological evolution of machines would continue inevitably until machines eventually replaced men altogether. In Erewhon, Butler argued:

“There is no security against the ultimate development of mechanical consciousness, in the fact of machines possessing little consciousness now. A mollusc has not much consciousness. Reflect upon the extraordinary advance which machines have made during the last few hundred years, and note how slowly the animal and vegetable kingdoms are advancing. The more highly organized machines are creatures not so much of yesterday, as of the last five minutes, so to speak, in comparison with past time.”

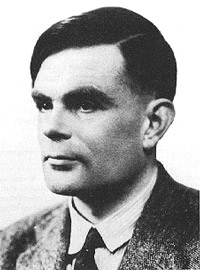

3. Alan Turing

In his 1951 paper Intelligent Machinery: A Heretical Theory, Alan Turing wrote of machines that would eventually surpass human intelligence:

“once the machine thinking method has started, it would not take long to outstrip our feeble powers. … At some stage therefore we should have to expect the machines to take control, in the way that is mentioned in Samuel Butler’s Erewhon.”

4. John von Neumann

In 1958 Stanislaw Ulam wrote about a conversation with John von Neumann, who said that “the ever accelerating progress of technology … gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue.” The singularity, as von Neumann allegedly defined it, is the moment beyond which “technological progress will become incomprehensibly rapid and complicated.”

5. I.J. Good

I.J. Good, who greatly influenced Vernor Vinge, never used the term singularity itself. What Vinge later called the singularity, Good called an intelligence explosion. By that, Good meant a positive feedback cycle in which minds create technology that improves minds; once started, the cycle would rapidly surge upward and produce superintelligence:

“Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an “intelligence explosion,” and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make.”

6. Vernor Vinge

Vernor Vinge introduced the term technological singularity in the January 1983 issue of Omni magazine, tying it specifically to the creation of intelligent machines:

“We will soon create intelligences greater than our own. When this happens, human history will have reached a kind of singularity, an intellectual transition as impenetrable as the knotted space-time at the center of a black hole, and the world will pass far beyond our understanding. This singularity, I believe, already haunts a number of science-fiction writers. It makes realistic extrapolation to an interstellar future impossible. To write a story set more than a century hence, one needs a nuclear war in between … so that the world remains intelligible.”

He later developed the concept further in his 1993 essay The Coming Technological Singularity:

“Within thirty years, we will have the technological means to create superhuman intelligence. Shortly after, the human era will be ended. […] I think it’s fair to call this event a singularity. It is a point where our models must be discarded and a new reality rules. As we move closer and closer to this point, it will loom vaster and vaster over human affairs till the notion becomes a commonplace. Yet when it finally happens it may still be a great surprise and a greater unknown.”

It is important to stress that, for Vinge, the singularity could occur in four ways:

1. The development of computers that are “awake” and superhumanly intelligent.

2. Large computer networks (and their associated users) may “wake up” as a superhumanly intelligent entity.

3. Computer/human interfaces may become so intimate that users may reasonably be considered superhumanly intelligent.

4. Biological science may find ways to improve upon the natural human intellect.

7. Hans Moravec

In his 1988 book Mind Children, computer scientist and futurist Hans Moravec generalizes Moore’s Law to make predictions about the future of artificial life. Moravec argues that, starting around 2030 or 2040, robots will evolve into a new series of artificial species, eventually succeeding Homo sapiens. In his 1993 paper The Age of Robots, Moravec writes:

“Our artifacts are getting smarter, and a loose parallel with the evolution of animal intelligence suggests one future course for them. Computerless industrial machinery exhibits the behavioral flexibility of single-celled organisms. Today’s best computer-controlled robots are like the simpler invertebrates. A thousand-fold increase in computer power in this decade should make possible machines with reptile-like sensory and motor competence. Properly configured, such robots could do in the physical world what personal computers now do in the world of data–act on our behalf as literal-minded slaves. Growing computer power over the next half-century will allow this reptile stage will be surpassed, in stages producing robots that learn like mammals, model their world like primates and eventually reason like humans. Depending on your point of view, humanity will then have produced a worthy successor, or transcended inherited limitations and transformed itself into something quite new. No longer limited by the slow pace of human learning and even slower biological evolution, intelligent machinery will conduct its affairs on an ever faster, ever smaller scale, until coarse physical nature has been converted to fine-grained purposeful thought.”

8. Ted Kaczynski

In Industrial Society and Its Future (aka the “Unabomber Manifesto”), Ted Kaczynski tried to explain, justify and popularize his militant resistance to technological progress:

“… the human race might easily permit itself to drift into a position of such dependence on the machines that it would have no practical choice but to accept all of the machines decisions. As society and the problems that face it become more and more complex and machines become more and more intelligent, people will let machines make more of their decision for them, simply because machine-made decisions will bring better result than man-made ones. Eventually a stage may be reached at which the decisions necessary to keep the system running will be so complex that human beings will be incapable of making them intelligently. At that stage the machines will be in effective control. People won’t be able to just turn the machines off, because they will be so dependent on them that turning them off would amount to suicide.”

9. Nick Bostrom

In 1997 Nick Bostrom, a world-renowned philosopher and futurist, wrote How Long Before Superintelligence. In it, Bostrom seems to embrace I.J. Good’s intelligence explosion thesis through his notion of superintelligence:

“By a “superintelligence” we mean an intellect that is much smarter than the best human brains in practically every field, including scientific creativity, general wisdom and social skills. This definition leaves open how the superintelligence is implemented: it could be a digital computer, an ensemble of networked computers, cultured cortical tissue or what have you. It also leaves open whether the superintelligence is conscious and has subjective experiences.”

10. Ray Kurzweil

Ray Kurzweil is easily the most popular singularitarian. He embraced Vernor Vinge’s term and brought it into the mainstream, yet his definition is not entirely consistent with Vinge’s original. In his seminal book The Singularity Is Near, Kurzweil defines the technological singularity as:

“… a future period during which the pace of technological change will be so rapid, its impact so deep, that human life will be irreversibly transformed. Although neither utopian nor dystopian, this epoch will transform the concepts that we rely on to give meaning to our lives, from our business models to the cycle of human life, including death itself.”

11. Kevin Kelly, senior maverick and co-founder of Wired Magazine

For Kelly, the singularity is the point at which “all the change in the last million years will be superseded by the change in the next five minutes.”

12. Eliezer Yudkowsky

In 2007 Eliezer Yudkowsky pointed out that definitions of the singularity fall within three major schools: Accelerating Change, the Event Horizon, and the Intelligence Explosion. He also argued that many of the different definitions assigned to the term are mutually incompatible rather than mutually supporting. For example, Kurzweil extrapolates current technological trajectories past the arrival of self-improving AI or superhuman intelligence, which, Yudkowsky argues, is in tension with both I.J. Good’s proposed discontinuous upswing in intelligence and Vinge’s thesis of unpredictability. Interestingly, Yudkowsky places Vinge’s original definition within the Event Horizon camp while placing himself within the Intelligence Explosion school. (In my opinion, Vinge belongs equally to the Intelligence Explosion and Event Horizon schools.)

13. Michael Anissimov

In Why Confuse or Dilute a Perfectly Good Concept, Michael Anissimov writes:

“The original definition of the Singularity centers on the idea of a greater-than-human intelligence accelerating progress. No life extension. No biotechnology in general. No nanotechnology in general. No human-driven progress. No flying cars and other generalized future hype…”

According to the above definition, and in contrast to his SIAI colleague Eliezer Yudkowsky, Anissimov would seem to fall within both the Intelligence Explosion and Accelerating Change schools. (In an earlier article, Anissimov defines the singularity as transhuman intelligence.)

14. John Smart

On his Acceleration Watch website John Smart writes:

“Some 20 to 140 years from now—depending on which evolutionary theorist, systems theorist, computer scientist, technology studies scholar, or futurist you happen to agree with—the ever-increasing rate of technological change in our local environment is expected to undergo a permanent and irreversible developmental phase change, or technological “singularity,” becoming either:

A. fully autonomous in its self-development,

B. human-surpassing in its mental complexity, or

C. effectively instantaneous in self-improvement (from our perspective),

or if only one of these at first, soon after all of the above. It has been postulated by some that local environmental events after this point must also be “future-incomprehensible” to existing humanity, though we disagree.”

15. James Martin

James Martin, a world-renowned futurist, computer scientist, author and lecturer and, among many other things, the largest donor in the history of Oxford University, whose gift funded the Oxford Martin School, defines the singularity as follows:

The singularity “is a break in human evolution that will be caused by the staggering speed of technological evolution.”

16. Sean Arnott

“The technological singularity is when our creations surpass us in our understanding of them vs their understanding of us, rendering us obsolete in the process.”

17. Your Definition of the Technological Singularity?!…

As we can see, there is a wide variety of flavors when it comes to defining the technological singularity. I personally tend to favor what I would call the original Vingean definition, as inspired by I.J. Good’s intelligence explosion, because it stresses both the crucial importance of self-improving superintelligence and its event-horizon-type discontinuity and uniqueness. (I also sometimes define the technological singularity as the event, or sequence of events, likely to occur at or shortly after the birth of strong artificial intelligence.)

At the same time, after all of the above definitions, it should be clear that we really do not know what the singularity is (or will be). Thus we are just using the term to show (or hide) our own ignorance.

But tell me – what is your own favorite definition of the technological singularity?